Open WebUI for Azure AI LLMs

If ChatGPT is for OpenAI models, Open WebUI is for open-source models!

With that, let's see how to get started with Open WebUI and get a ChatGPT-style interface for open-source and vendor-hosted models (Azure, GCP, AWS).

You can also plug in an OpenAI API key and use this interface for OpenAI models. So, practically, you don't even need ChatGPT at all: one interface for OpenAI and all other models, in one place.

Step 1: Install Docker and start the container that Open WebUI provides. Refer to their official documentation to pick the right image.

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Once you run the docker command, check that the container is up and running.
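One way to do that check from the terminal is sketched below. It assumes the container name open-webui from the command above; the helper name container_running is made up for this post and is not part of Docker.

```shell
# Succeeds (exit code 0) only if Docker reports the named container as running.
# container_running is a hypothetical helper name used for illustration.
container_running() {
  [ "$(docker inspect --format '{{.State.Running}}' "$1" 2>/dev/null)" = "true" ]
}

# Usage:
#   container_running open-webui && echo "open-webui is up"
```

If the check fails, `docker logs open-webui` usually shows why the container stopped.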

That's it. You are practically done, and can launch Open WebUI in your browser at:

http://(hostname):3000

The first time, it will ask you to register as an admin. Follow the wizard.

Once done, log in.

Now, if you don't know about Ollama, go through my previous blog(s).

Basically, if you have Ollama up and running with open-source models already deployed, they will automatically show up in Open WebUI.


Before we integrate Azure AI models into Open WebUI, quickly deploy Watchtower as well. It will keep your Open WebUI container running on the latest version of the image.

Just run this Docker command and it's set up for you.

docker run -d --name watchtower \
  --volume /var/run/docker.sock:/var/run/docker.sock \
  containrrr/watchtower -i 300 open-webui

Now, let's see how to integrate models deployed on the Azure AI platform with the Open WebUI interface.

Here is the reference article, but you can just follow my steps for a clearer step-by-step approach.

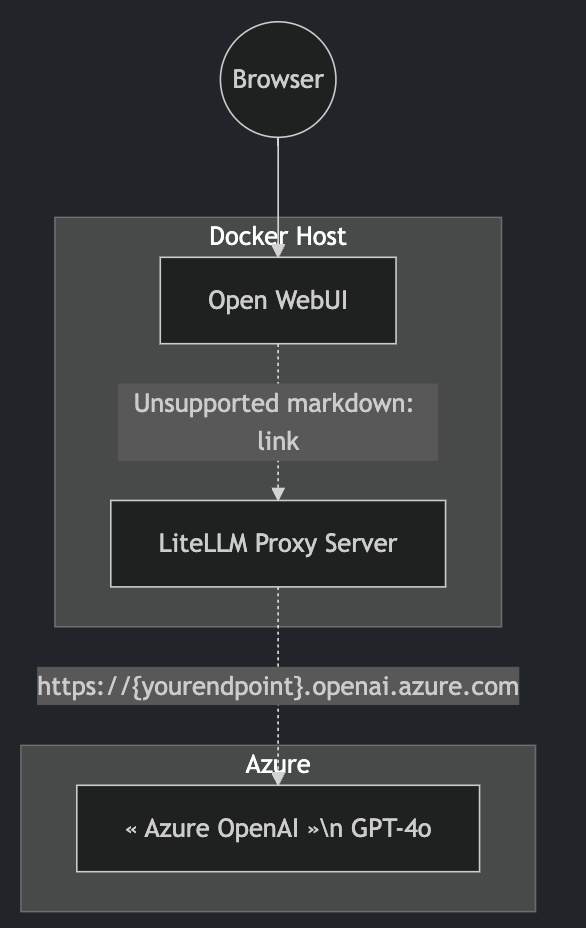

Basically, we use a tool called LiteLLM, which acts as a router between Open WebUI and the Azure platform by exposing a ChatGPT-like (OpenAI-compatible) API for calling the models deployed on Azure.

This assumes you have already deployed OpenAI and non-OpenAI models on the Azure AI platform.

First, go to your Azure deployment and collect these connection details.

Then export these environment variables on your machine.

export AZURE_API_BASE=

export AZURE_API_KEY=

export AZURE_API_VERSION=export AZURE_API_BASE_R1=

export AZURE_API_KEY_R1=

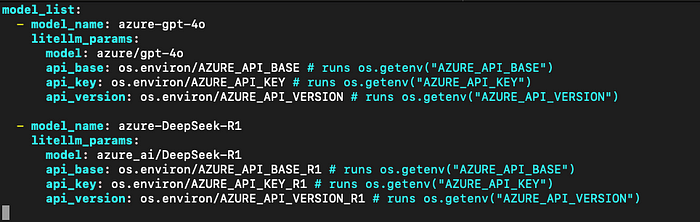

export AZURE_API_VERSION_R1=Then create a file called litellm_config.yaml and update the file with this content.

VERY IMPORTANT NOTE:

If the model is an OpenAI model, use: model: azure/(modelname)

If the model is a non-OpenAI model, use: model: azure_ai/(modelname)

model_list:
  - model_name: azure-gpt-4o
    litellm_params:
      model: azure/gpt-4o
      api_base: os.environ/AZURE_API_BASE # runs os.getenv("AZURE_API_BASE")
      api_key: os.environ/AZURE_API_KEY # runs os.getenv("AZURE_API_KEY")
      api_version: os.environ/AZURE_API_VERSION # runs os.getenv("AZURE_API_VERSION")
  - model_name: azure-DeepSeek-R1
    litellm_params:
      model: azure_ai/DeepSeek-R1
      api_base: os.environ/AZURE_API_BASE_R1 # runs os.getenv("AZURE_API_BASE_R1")
      api_key: os.environ/AZURE_API_KEY_R1 # runs os.getenv("AZURE_API_KEY_R1")
      api_version: os.environ/AZURE_API_VERSION_R1 # runs os.getenv("AZURE_API_VERSION_R1")
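Since the config reads every value from the environment, an empty or unset variable only shows up later as a failed model call. A small pre-flight check can catch that early. This is just a sketch; check_env is a hypothetical helper name, not something LiteLLM or Docker ships.

```shell
# Print the name of every listed variable that is unset or empty.
# Returns non-zero if at least one is missing.
# check_env is a hypothetical helper name used for illustration.
check_env() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable called $name (portable across sh and bash).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_env AZURE_API_BASE AZURE_API_KEY AZURE_API_VERSION \
#     AZURE_API_BASE_R1 AZURE_API_KEY_R1 AZURE_API_VERSION_R1
```

No output and a zero exit code means all six variables are populated in the current shell.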

Then simply run this command to start the LiteLLM Docker container, passing the connection details in as environment variables.

docker run -d \
  -v $(pwd)/litellm_config.yaml:/app/config.yaml \
  -e AZURE_API_KEY=$AZURE_API_KEY \
  -e AZURE_API_BASE=$AZURE_API_BASE \
  -e AZURE_API_VERSION=$AZURE_API_VERSION \
  -e AZURE_API_KEY_R1=$AZURE_API_KEY_R1 \
  -e AZURE_API_BASE_R1=$AZURE_API_BASE_R1 \
  -e AZURE_API_VERSION_R1=$AZURE_API_VERSION_R1 \
  -p 4000:4000 \
  --name litellm-proxy \
  --restart always \
  ghcr.io/berriai/litellm:main-latest \
  --config /app/config.yaml --detailed_debug

Check that the container is up and running.

And you are practically done connecting your host server to the Azure LLMs (not Open WebUI yet).

But just to validate that you can call the Azure-hosted models from your host server, run this curl command.

In this command, you are simply calling the LiteLLM Docker container, and that container calls the Azure AI model, because it already has all the connection details.

curl --location 'http://0.0.0.0:4000/chat/completions' \
  --header 'Content-Type: application/json' \
  --data '{
    "model": "azure-gpt-4o",
    "messages": [
      {
        "role": "user",
        "content": "What is the purpose of life?"
      }
    ]
  }'

Check the non-OpenAI model connection as well:
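A minimal sketch of that check, assuming the azure-DeepSeek-R1 alias from litellm_config.yaml and the proxy published on port 4000 of the host. The helper names chat_payload and ask_model are made up for this post, and the payload builder does no JSON escaping, so keep the prompt free of double quotes.

```shell
# Build the JSON body for a single-turn chat request.
# $1 = model alias from litellm_config.yaml, $2 = prompt (no escaping is done).
chat_payload() {
  printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}]}' "$1" "$2"
}

# Send the request to the LiteLLM proxy (assumed reachable at localhost:4000).
ask_model() {
  curl --silent --location 'http://localhost:4000/chat/completions' \
    --header 'Content-Type: application/json' \
    --data "$(chat_payload "$1" "$2")"
}

# Usage:
#   ask_model azure-DeepSeek-R1 "What is the purpose of life?"
```

The same ask_model call works for azure-gpt-4o, since the proxy picks the Azure deployment from the alias.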

Cool.

Now, let's connect the Open WebUI interface to these Azure models.

Go to the Admin Panel.

Now go to Admin Settings.

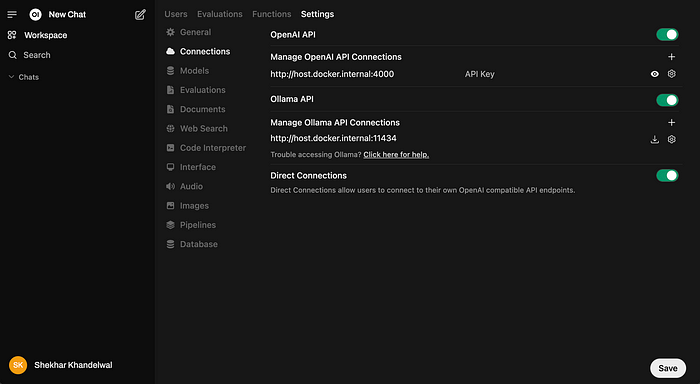

Go to Connections.

Here, if you simply provide an OpenAI API key, all OpenAI models will be available in the models dropdown.

But let's focus on Azure AI models. You can either update the existing OpenAI connection URL or add a new connection by clicking the + icon next to 'Manage OpenAI API Connections'.

Let's update the OpenAI API endpoint as follows:

- Endpoint: http://host.docker.internal:4000
- Secret: AnyDummyValue

And you are all set.

If you go back to the home page and open a chat window, the dropdown will list all the models you configured when creating the LiteLLM container.
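If the dropdown stays empty, it can help to ask the proxy directly which models it is serving. The sketch below assumes the LiteLLM proxy exposes the OpenAI-style /v1/models route and that no master key is configured; both are assumptions, not something set up earlier in this post. list_proxy_models is a hypothetical helper name.

```shell
# Fetch the model list from the LiteLLM proxy.
# $1 = optional base URL; defaults to the port published on the host earlier.
list_proxy_models() {
  curl --silent "${1:-http://localhost:4000}/v1/models"
}

# Usage (from the host):
#   list_proxy_models
# The JSON response should mention the aliases azure-gpt-4o and azure-DeepSeek-R1.
```

Note that the host uses localhost:4000, while Open WebUI, running inside its own container, reaches the same proxy through host.docker.internal:4000.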

Test the connection.

Happy Learning !