Open Interpreter: Using a Locally Deployed LLM

Open Interpreter GitHub page: https://github.com/KillianLucas/open-interpreter/

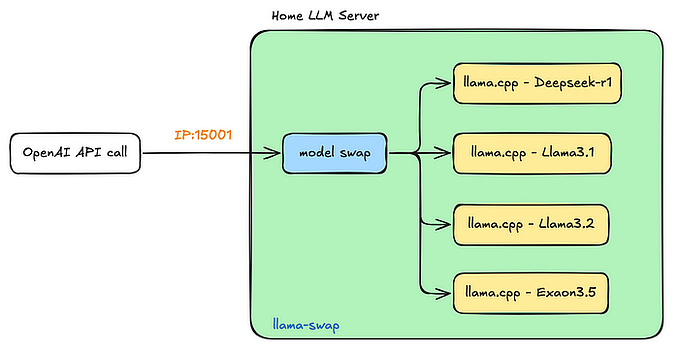

To use an open-source, locally deployed model with Open Interpreter, you first need to deploy the LLM on your own machine. You can use either LM Studio or Ollama for this.

Ollama (setup guide): https://medium.com/@khandelwal-shekhar/deploy-llm-locally-using-ollama-277136e4b1f6

LM Studio: https://lmstudio.ai/

Install Open Interpreter:

```shell
pip install open-interpreter
```

Then open a terminal and run `interpreter`:

It asks for an OpenAI API key, because by default Open Interpreter runs on the OpenAI API. To run it with a local model instead, run:

```shell
interpreter --local
```

For this to work, you must have an LLM loaded in LM Studio on your machine, with LM Studio's local server running.
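Before running `interpreter --local`, it can help to confirm that LM Studio's server is actually answering. Below is a minimal sketch using only the Python standard library. It assumes LM Studio's default address, `http://localhost:1234/v1` (the same one used in the Python settings further down), and its OpenAI-style `/models` endpoint. `model_ids` and `list_local_models` are hypothetical helpers written for this example, not part of Open Interpreter.

```python
from __future__ import annotations

import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port, 1234.
LM_STUDIO_BASE = "http://localhost:1234/v1"


def model_ids(payload: dict) -> list[str]:
    """Pull model ids out of an OpenAI-style /models response: {"data": [{"id": ...}, ...]}."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_local_models(base_url: str = LM_STUDIO_BASE, timeout: float = 2.0) -> list[str] | None:
    """Return the model ids the server reports, or None if nothing usable answers at base_url."""
    try:
        with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as resp:
            return model_ids(json.load(resp))
    except (OSError, ValueError):
        # OSError covers urllib.error.URLError (connection refused, HTTP errors, timeouts);
        # ValueError covers a response body that is not valid JSON.
        return None


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print("No server at", LM_STUDIO_BASE, "- start LM Studio's local server first.")
    else:
        print("Models available:", models)
```

If `list_local_models()` returns `None`, nothing is listening at that address, which usually means the server has not been started in LM Studio yet.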

Now ask a question in the interpreter terminal:

You can ask the interpreter to perform all kinds of tasks, from writing and executing code to drafting content such as blog posts. For better responses, it's important to pick an LLM suited to the task (for example, a code-focused model for programming). In general, the bigger the LLM, the better the responses. Because the model runs locally, the quality of the results depends heavily on your machine's computing power: larger models need more memory and compute to run at a usable speed.
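To make "depends on your machine" concrete, a common rule of thumb is that the weights alone need roughly (number of parameters) × (bits per weight) / 8 bytes. The sketch below applies it. The model sizes and quantization levels are illustrative assumptions, and the figure leaves out the KV cache and runtime overhead, so actual memory use is higher.

```python
def approx_weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough memory for the model weights alone, in GB (1 GB = 1e9 bytes).

    Leaves out the KV cache, activations and runtime overhead, so real usage is higher.
    """
    bytes_per_weight = bits_per_weight / 8
    # (params_billion * 1e9 weights) * bytes_per_weight / (1e9 bytes per GB)
    return params_billion * bytes_per_weight


# Illustrative sizes: 7B/13B/70B models at 4-bit (quantized) and 16-bit precision.
for params, bits in [(7, 4), (7, 16), (13, 4), (70, 4)]:
    print(f"{params}B model at {bits}-bit: ~{approx_weight_memory_gb(params, bits):.1f} GB")
```

For example, a 7B model quantized to 4 bits needs about 3.5 GB just for its weights, while the same model at 16-bit needs about 14 GB, which is why a larger model may simply not fit on a smaller machine.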

Python wrapper for Open Interpreter

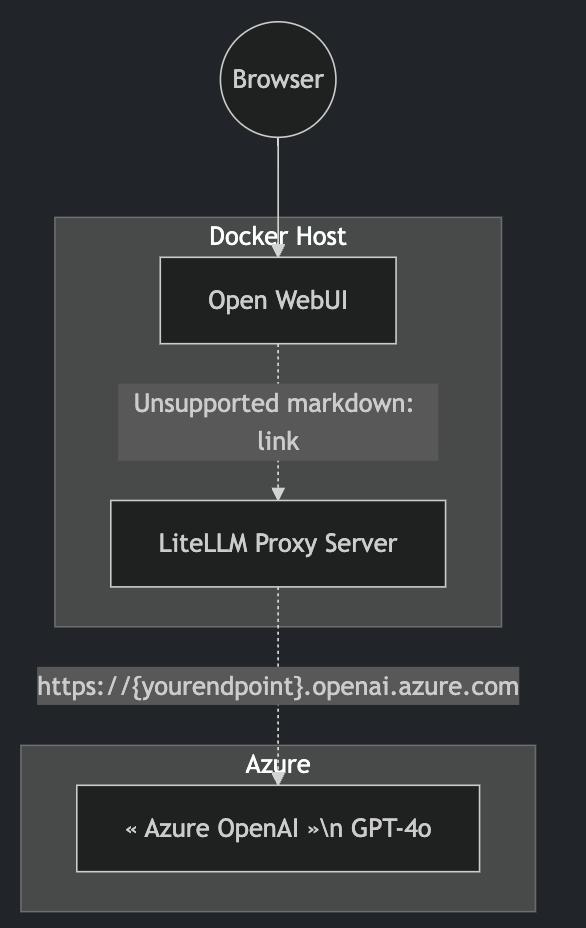

To replicate --local and connect to LM Studio, use these settings:

```python
from interpreter import interpreter

interpreter.offline = True  # Disables online features like Open Procedures
interpreter.llm.model = "openai/x"  # Tells OI to send messages in OpenAI's format
interpreter.llm.api_key = "fake_key"  # LiteLLM, which OI uses to talk to LM Studio, requires this
interpreter.llm.api_base = "http://localhost:1234/v1"  # Point this at any OpenAI compatible server

interpreter.chat()  # Start an interactive chat using the settings above
```

Happy Learning!